Turning AI-generated glitchy dance videos into pen-plotted album art

When POLAAR commissioned me to create the album cover for Soreab, a UK techno producer, they asked for something in the spirit of my "Turntable Anatomy" series (a pen plotted series of generative modular grid art). But this project would take me somewhere else: into the intersection of AI glitchy hallucinations, contemporary dance theory, and the physical permanence of ink on paper.

The album is called "CU" (Completely Unstable). Talking with Soreab about his creative process, I learned that his music is born in moments of mental difficulty. For him, production isn't as much creative expression as it is a therapeutic act. A ritual that transforms instability into something stable.

Feeling bad → Producing music → Feeling better.

This repeating cycle of losing balance and finding it again immediately became the leading inspiration to produce the cover of his album.

Out of the many ideas I had, I kept thinking about Doris Humphrey's "Fall and Recovery" modern dance technique. And that's where this project really began.

Fall and Recovery: A Dance Technique as Metaphor

Doris Humphrey (1895-1958) was a pioneering American modern dancer and choreographer who developed the "Fall and Recovery" technique. It is one of the foundational principles of modern dance that is still taught to this day. The concept is elegant: deliberately move away from a position of balance, then return to it.

The technique is simple but profound. We constantly fall out of balance (emotionally, mentally, physically) and spend our lives finding ways to recover.

The parallel with Soreab’s creative process was obvious. This cover would be about the movement from imbalance to stability.

There was just a little problem.

The Search for Source Material

I started looking for videos of Fall and Recovery performances on YouTube. I wanted clear, isolated shots of dancers performing the technique. What I found was disappointing: mostly archival footage, ensemble pieces where individual movements were hard to isolate, or demonstrations that didn't capture the full dramatic arc of the fall-and-recovery cycle.

The material I was looking for didn't exist. At least, not in a form I could reliably use.

What if I generated them?

Enter the Glitch

Earlier in April, I discovered Rosa Menkman, an artist whose practice centers on glitch aesthetics and its relationship with our modern society.

While Generative AI keeps improving, there’s still plenty of glitchy components to them. Models are well-known to have trouble rendering physically correct images or scenes. When they can do it reliably, we are very impressed.

Here, glitch actually made sense. This was perfect for what I was trying to express. With hallucinated physics or uncanny moves defying human anatomy, I could maybe have even more interesting results. The glitch as a metaphor just clicked.

Generating Dance Videos with Google Veo

I opened the Google Cloud Console to start experimenting with Google’s Veo 2 model. Immediately, I had questions: Would this kind of specific dance technique even be in the training data? What would an AI's interpretation of "fall and recovery" look like? Would it understand the intentionality of the movement, or would it just generate people falling down?

The prompts were fairly straightforward, with specific constraints.

“Please generate a video of a single person performing Doris Humphrey’s fall and recovery modern dance technique. Fixed camera angle showing the full stage. Clean, uncluttered background. Full body visible throughout”

I generated multiple videos, experimenting with different prompt variations. The cost added up by the time I had a collection I was happy with (~$100). But the results were fascinating, and to be honest, quite funny.

Veo 2's imperfections immediately became features. The dance moves were off, with impossible movements and very little elegance. It felt like Veo 2’s limits were immediately hit. None of these were the traditional fall and recovery moves that I had in mind.

I also experimented with Veo 3, and while it showed less anatomical quirks, the impossible movements were still there.

The AI had created exactly what I needed: a visual representation of instability that still captured the essential quality of the fall-and-recovery movement.

Now I needed to create the link between these generated videos and my pen plotter.

Pose Estimation: Translating Motion to Data

I had my source material made of AI-generated dancers moving through (latent) space. The next step was converting the visual information into geometric data I could plot on paper.

What is Pose Estimation?

In computer vision, a "pose" refers to the configuration of a body or object in three-dimensional space. Pose estimation is the process of detecting and tracking key anatomical landmarks (joints, limbs, the head, torso) and representing them as a connected set of points in space.

There are several modern pose estimation models available. Meta recently released Sapiens, which offers high-accuracy pose detection. OpenPose has been around for years. There are various commercial APIs that promise high-fidelity results.

I decided to use MediaPipe.

Why MediaPipe?

Google's MediaPipe is a set of machine-learning models optimized for edge computing. These models aren’t designed to be the most accurate. They are designed to run efficiently on devices with limited processing power. This means they’re fast.

I had briefly looked at Sapiens. It's technically impressive and well-marketed, but getting it running was annoying. More importantly, I didn't want something too perfect. I was working with hallucinated videos. I wasn’t looking for the most accurate model out there, but the easiest to use. I would gladly accept some glitches in the pose estimation detection.

MediaPipe’s output was perfectly acceptable to me. It faithfully tracked the poses as they appeared in the videos. It missed some poses sometimes, but that created an interesting visual result. MediaPipe was the perfect tool to use. The tool and the source material matched each other's level of imperfection.

Building Choreopath

Here's where I need to confess something: I don't particularly like Python. I don’t like the syntax, the import system, and the overall feel of the language. Yes, Python makes me sad. It's not a strong preference. I just find myself more comfortable in other languages. There’s no denying that Python has the best ecosystem for machine-learning or AI related tasks. So Python it was.

Rather than wrestling with it myself, I took a different approach. I asked Claude (yes, an AI) to generate a set of scripts that would:

- Take a video file as input

- Extract every single frame with OpenCV

- Run MediaPipe pose estimation on each extracted frame.

- Convert the pose landmarks to continuous SVG paths

- Output a complete SVG file that I could use in other software such as Inkscape.

This is what vibe coding is to me. Giving an AI assistant a clear specification and letting it handle the implementation details. It’s an amazing tool to quickly get to a result on a set of previously unknown APIs. Claude generated the initial script, I refined it, added features, and eventually turned it into a proper tool I called Choreopath.

Choreopath does more than just basic pose extraction. It includes:

- Video processing: Every single frame is processed for pose extraction. There is no interpolation.

- CSV output: Poses are extracted to a CSV output that can then be transformed into another format.

- Inkscape-compatible SVG generation: Clean vector path output suitable for pen plotting, layered by landmark groups.

- Overlay rendering: Preview the detected poses overlaid on the original video frames.

Processing the Videos

MediaPipe lives up to its reputation for speed. Processing a full video is a very quick process (~5 seconds for an 8-second video, on my M3 MacBook). It meant that the feedback loop was very short. I could iterate quickly, experimenting with different videos.

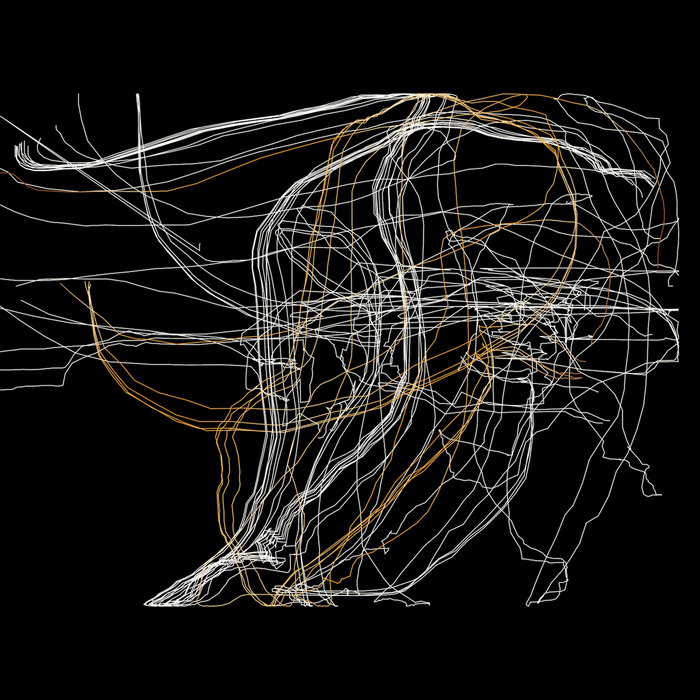

I was really excited by the output. Hundreds of points, each slightly offset from the last, creating these flowing rivers of lines that traced the movement through space. It looked like a long-exposure photograph of limbless motions, constructed from discrete moments, each one individually calculated and traced.

Most importantly, I had my SVG files in hand. Time to plot.

From Digital to Physical: The Pen Plotter

I had SVG files made of pure vector data describing movement through space. The final step was the most critical: translating this digital information into physical form.

The glitchy, unstable AI-generated motion would become permanent, stable, physical ink on paper. In a way, this is also a metaphor for how a brain calms itself down by focusing on physical realities.

The Plotter: UunaTek iDraw H

I use a UunaTek iDraw H pen plotter for my work. It's not the fanciest or most expensive plotter on the market, but it's fast, reliable, and handles A3 format, which is the size I find the easiest to work with. A3 papers are plenty and cost-effective to experiment with. It’s also pretty unusual to empty a pen cartridge on a single sheet of A3 paper.

Pen plotters are fascinating machines. They're essentially robots with precision motors that move a pen across a surface. Unlike printers that lay down toner or spray ink, plotters actually draw, moving the pen along paths just like a human hand would (but with inhuman precision and consistency).

This physical drawing process is important to the concept. Each line is laid down in real time, the pen moving across the paper, the ink flowing from physical contact between pen and surface. It takes time. It makes noise. The pen sometimes skips or bleeds slightly. It's an analog process with all the imperfections that implies. Even within the same series, one plot might be different from a second one.

The plotting process is influenced by the chosen speed. The pen will react differently if it is moved at high speed or low speed. Some pens will also react differently if the pen-up/pen-down motion is very quick or very slow. When working with a plotter, these choices are crucial to the end result and are entirely part of the creative process.

For this project, an A3 sheet took approximately 25 minutes to complete, drawing in total 47 meters of lines.

Color Choices: Black, White and Gold

I'm a big fan of Sakura Gelly Roll pens. They flow smoothly, have consistent ink coverage, and come in beautiful colors, including metallics that really pop on dark paper. I am also a big fan of heavy papers with a fine grain.

For this series, we decided on black paper as the substrate, white ink for the base structure, gold ink for emphasis and depth. That would give plenty of contrast, and I love contrast.

Each piece required three plotting passes:

- White base - The complete pose data in white, establishing the overall structure

- Gold layer - Selected body regions in gold, adding warmth and hierarchy

- Gold reinforcement - A second gold pass over them to deepen the color and create emphasis

Challenges and Lessons

Aesthetic Control over Multiple Media

My biggest concern going into this project was creating a piece that could work on 3 different media: printed as a vinyl cover, plotted on 2 different paper sizes, and used as a digital illustration for all streaming platforms.

We chose to print the vinyl cover with a Pantone Metallic Coated ink (10122C, for you Pantone nerds). It will mimic the metallic gold effect of the plotted pieces.

The earliest experiments showed that the iDraw plotter exerts too much pressure. In some pen and paper configurations, the pen could end up damaging the paper. Removing a small spring helped decrease the pressure.

It’s worth noting that we have no direct control over how the digital image will be processed by all the streaming platforms the album will be distributed to. There is no way to give them all the different formats they need. We have no choice but to surrender to their processing pipeline. Interestingly enough (or funnily enough), the digital realm is where we have the least control over how things will look.

AI in Creative Practice

This project sits at an intersection of human creativity and AI-generated content. The AI didn't create the final artwork, but it also wasn't just a tool I wielded with complete control. It was more like a collaboration with a partner who has very different capabilities and limitations than I do.

AI provided the ability to generate base material that didn't exist (fall and recovery dance performances), complete with aesthetic qualities (glitches and artifacts) that would be difficult to introduce intentionally through traditional means. It would be a whole new project and a whole different budget!

To be fair, I didn’t try to ask any AI about the creative direction to take for this project. Maybe an AI model could provide the conceptual through-line from Soreab's therapeutic music practice to Doris Humphrey's dance theory. Maybe it could make aesthetic judgments about which generated videos worked for the concept. Or maybe it could design the multi-color plotting strategy and decide that imperfection was actually perfection for this project. But what would be the point of it? Why would I want to surrender my brain’s creative output?

I can’t say for sure that the AI could not provide that kind of creative direction. But why would I want to not collaborate with other people to craft cultural artifacts that didn't exist before?

I’m much more interested in AI as a tool rather than AI as a replacement for connection and collaboration.

The Result

The final artwork captures the flowing motion from fall to recovery. It now serves as the cover for Soreab's "CU" album, released on POLAAR.

Limited editions are available: 10 A3 and 20 A5, all individually plotted. They’re sold unframed, signed and numbered on the back. They each come with an authenticity certificate.

Open Source: Choreopath

The entire pose-to-SVG pipeline is open source under an MIT license and available on GitHub. The code is yours to use, modify, and remix. The README includes installation instructions, usage examples, and documentation of the various options and features.

I'd love to see what you create with this technique. I think there are things to be done, such as transforming poses to modulate sounds in a DAW. Ultimately, the combination of pose estimation and data art opens up interesting possibilities beyond what I've explored here.

Closing Thoughts

What started as an album cover commission became an exploration of how AI can serve creative intent, how digital instability can be transformed into physical permanence, and how contemporary dance theory from the 1920s can find new expression through 2024's generative AI technology.

The tools change. The technologies evolve. But the human experiences we're trying to express remain constant. Instability, struggle, recovery, and balance are core tenets of the human experience. Sometimes the newest technology and the oldest art form can collaborate to express something timeless.

The album and plots are available for purchase on Soreab's Bandcamp page.

-

CU, by Soreab

soreab.bandcamp.com